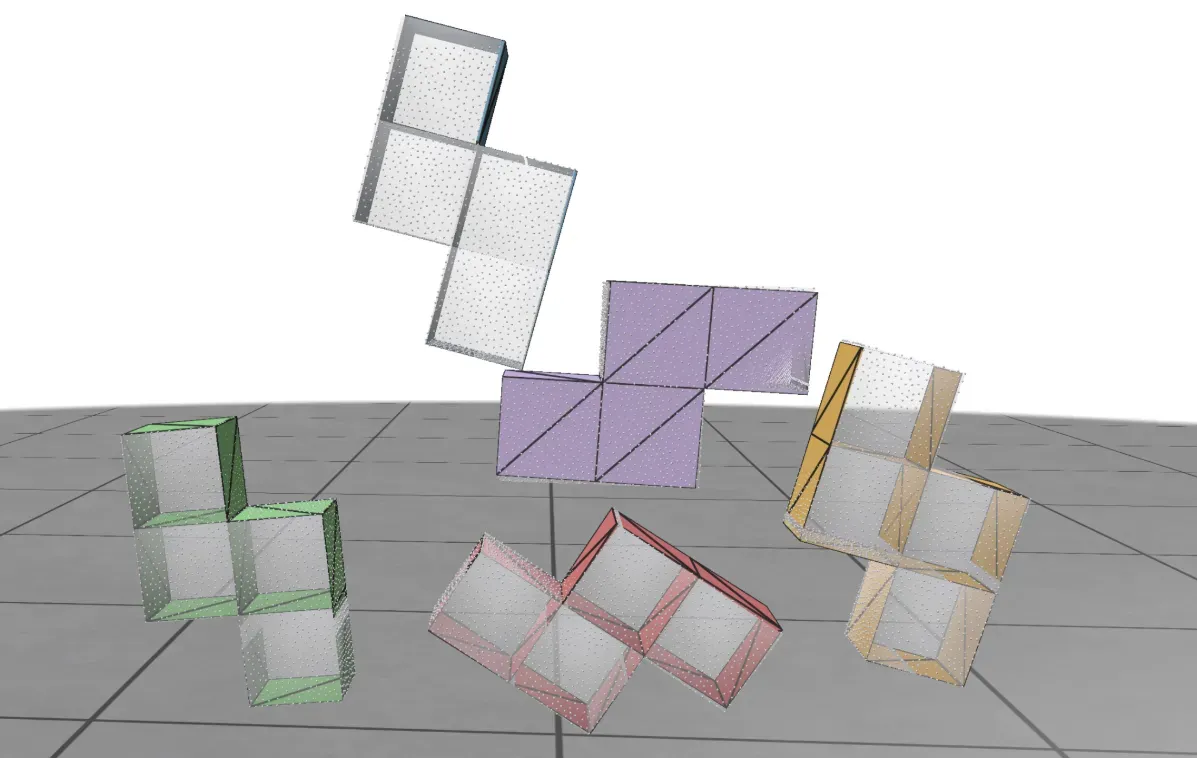

This week I made a major breakthrough in my research project. As I mentioned in HKUST PhD Chronicle, Week 18, Debugging PointNet++, I was struggling with poor performance when predicting the 6D pose. It turns out that replacing the backbone and fine-tuning the parameters significantly improved the performance. I’ve solved the single-object case and I am now looking toward the multi-object challenge!🤘

This is great progress, and I am super happy about it! 😆

The following is my weekly reflection.

A Review of PyTorch

To better understand how the loss function and optimizer really work, I did a lightning-quick review of PyTorch. You can check it out here: How to Train and Use a Neural Network? The TL;DR.

A Glance at Point Transformer V3

I think this topic deserves a standalone blog post explaining the technical innovations behind 📄Point Transformer V3: Simpler, Faster, Stronger. I really enjoy the idea of serialization.

In models like 📄PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space or DGCNN, the points are typically treated as an unordered set (think std::unordered_set). In contrast, Point Transformer V3 serializes the points using space-filling curves (such as the Z-order curve or the Hilbert curve), which turns the point cloud into an ordered sequence.

Remark

By “serialization”, I literally mean at the memory-address level! (I.e., using bitwise operations.)
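
To make the bitwise part concrete, here is a minimal sketch of Z-order (Morton) serialization in plain Python. It is not the Point Transformer V3 implementation: the helper names `morton3d` and `serialize`, the `grid_size` parameter, and the choice of putting the x bit lowest are all my own assumptions for illustration. The idea is to snap each point to a grid cell, interleave the bits of the three cell indices into one integer, and sort the points by that integer.

```python
def morton3d(ix: int, iy: int, iz: int, bits: int = 10) -> int:
    """Interleave the low `bits` bits of three cell indices into one Z-order code.

    Bit b of ix lands at position 3*b, iy at 3*b + 1, iz at 3*b + 2.
    (This axis order is an assumption made for this sketch.)
    """
    code = 0
    for b in range(bits):
        code |= ((ix >> b) & 1) << (3 * b)
        code |= ((iy >> b) & 1) << (3 * b + 1)
        code |= ((iz >> b) & 1) << (3 * b + 2)
    return code


def serialize(points, grid_size: float = 1.0, bits: int = 10):
    """Return the point indices sorted along the Z-order curve.

    `points` is a sequence of (x, y, z) tuples. Coordinates are shifted so
    the minimum corner sits at the origin, then quantised into grid cells.
    """
    mins = [min(p[d] for p in points) for d in range(3)]

    def key(i):
        cell = [int((points[i][d] - mins[d]) // grid_size) for d in range(3)]
        return morton3d(*cell, bits=bits)

    return sorted(range(len(points)), key=key)


points = [(1.0, 1.0, 1.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
order = serialize(points, grid_size=1.0)
print(order)  # indices of the points in curve order
```

After sorting, points that are neighbours in the sequence tend to be neighbours in space, so the unordered set becomes a 1D sequence that can be chunked and processed like tokens. A Hilbert curve would follow the same pattern with a different code function.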