The terms “ball query” and “multi-scale grouping” were new to me when I first started reading the PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space paper. Here is my breakdown of what they mean and how they work.

Ball Query

To understand a ball query, we need to define four key components:

- the source point cloud

- the target point cloud

- the search radius

- an integer limit on how many neighbors are returned per point

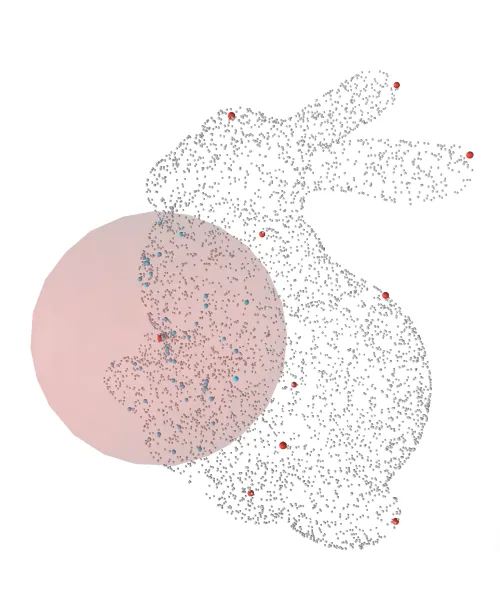

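Suppose we have two point clouds, a source and a target. The ball_query operation performs the following steps:

- iterate over each point in the source point cloud

- find up to the integer limit of neighboring points in the target point cloud that are within the search radius of that point.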
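The two steps above can be sketched in a few lines of NumPy. This is only a brute-force illustration, not the implementation from the paper or from any library: the parameter names `source`, `target`, `radius`, and `k` are my own, and padding empty slots with `-1` is an assumption made for this sketch.

```python
import numpy as np

def ball_query(source, target, radius, k):
    """For each source point, return indices of up to k target points within radius.

    Hypothetical helper for illustration. Slots with no neighbor hold -1
    (an assumption of this sketch, not a fixed convention).
    """
    # Pairwise squared distances, shape (num_source, num_target).
    diff = source[:, None, :] - target[None, :, :]
    dist2 = np.sum(diff * diff, axis=-1)

    idx = np.full((source.shape[0], k), -1, dtype=np.int64)
    for i in range(source.shape[0]):
        # Keep the first k target points that fall inside the ball.
        hits = np.flatnonzero(dist2[i] <= radius * radius)[:k]
        idx[i, :len(hits)] = hits
    return idx

source = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0]])
target = np.array([[0.5, 0.0, 0.0], [0.0, 0.9, 0.0], [3.0, 0.0, 0.0], [10.0, 0.0, 0.2]])
print(ball_query(source, target, radius=1.0, k=2))
```

Note that the result is "the first k points found inside the ball", not "the k nearest points". That is the difference between a ball query and a k-nearest-neighbor search.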

The following is the 1st iteration.

So, why is this special?

Multi-Scale Grouping

I believe multi-scale grouping is best understood when paired with the concept of the ball query. A great analogy for this comes from 3Blue1Brown’s video on neural networks.

In his explanation of the MNIST task (classifying handwritten digits), he illustrates how a digit is built up from smaller “chunks” or patterns. Multi-scale grouping works on a similar idea. It is often described as a “density-adaptive feature learning approach”.

In code, it often looks like this:

    'radius_per_layer': [[10, 20, 30], [30, 45, 60], [60, 80, 120], [120, 160, 240]]

We can define different radii for each layer. The smallest radius can be thought of as capturing the “smallest learnable feature”. For example, I recently struggled with poor performance when using PointNet++ (see HKUST PhD Chronicle, Week 18, Debugging PointNet++). Part of the reason was that I had set an extremely small radius for learning the features of my 3D Tetris shapes.

After I read the paper and analyzed my object dimensions, I updated the radius configuration:

    'radius_per_layer': [
        [50, 75, 100],
        [100, 150, 200],
        [200, 250, 300],
        [300, 450, 600]
    ],

Notice that the first radius is 50mm. This is intentional: my 3D Tetris blocks are built from 50mm x 50mm “unit cubes”, so the ball query at this scale is sized to pick up the “corner” or “unit block” feature first.

As the radii get bigger, the network attempts to learn larger, more abstract features:

- 50mm: the corner/unit feature

- 100mm: the edge feature

- 150mm: features of three-block clusters…

- …

- 450mm: features covering about 3/4 of the scene

- 600mm: the global scene features
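To make "several radii in one layer" concrete, here is a minimal sketch that runs the same neighborhood query around the same centers once per radius. The helper names `group_at_radius` and `multi_scale_group` are hypothetical, the toy scene is made up, and `-1` padding is again my own assumption. In the paper, each per-radius group is then fed through its own small PointNet and the resulting features are concatenated. That learning step is left out here.

```python
import numpy as np

def group_at_radius(centers, points, radius, k):
    """Indices of up to k points within radius of each center (-1 marks an empty slot)."""
    dist = np.linalg.norm(centers[:, None, :] - points[None, :, :], axis=-1)
    idx = np.full((len(centers), k), -1, dtype=np.int64)
    for i, row in enumerate(dist):
        hits = np.flatnonzero(row <= radius)[:k]
        idx[i, :len(hits)] = hits
    return idx

def multi_scale_group(centers, points, radii, k):
    """Run the same query once per radius; returns {radius: index array}."""
    return {r: group_at_radius(centers, points, r, k) for r in radii}

# Toy scene (made up): one point every 50 mm along a line, one center at the origin.
points = np.array([[float(x), 0.0, 0.0] for x in range(0, 301, 50)])
centers = np.array([[0.0, 0.0, 0.0]])

# First entry of the radius configuration above.
groups = multi_scale_group(centers, points, radii=[50, 75, 100], k=8)
for r, idx in groups.items():
    print(f"radius {r} mm -> {int((idx >= 0).sum())} neighbors")
```

With points spaced one "unit cube" apart, the 50mm and 75mm balls see the same neighbors, and only the 100mm ball reaches the next point. Printing neighbor counts like this is a cheap way to check whether a chosen radius actually matches the object dimensions.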

Remark

A fun fact. I only just learned that the plural of radius is radii. It is such a weird character combination! Uh..

Remark

If you enjoyed this article, see also What is Farthest Point Sampling (FPS)?