I discovered the farthest point sampling(FPS) algorithm while reading the 📄PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space paper. To appreciate the genius of this idea, we need to distinguish between 2 key concepts:

- index (the order of data in memory)

- geometric (the actual shape in 3d space)

Why does this distinction matter? In short, FPS aims to

- avoid the pitfalls of index-dependent sampling.

- preserve the geometric(“intrinsic”) representation of the pointcloud

Remark

See also the related article What is Ball Query and Multi-Scale Grouping?

How it works?

The logic of FPS is fairly straightforward:

- Pick a starting point.

- For each remaining point, calculate the Euclidean distance to the closest point that has already been sampled.

- Choose the point that is the farthest away.

- Add this point to the sampled set and repeat.

Why “random” isn’t good enough?

Relying on random indices to sample a pointcloud is risky because it ignores geometry.

“Random choice” blindly picks indices. Suppose we have a pointcloud the Stanford bunny, if the points are stored in a random order in memory, random sampling might work okay. But what if the data is sorted (e.g., ordered from )? Random sampling has no guarantee of coverage. It often leaves “holes” or clusters of points simply by chance.

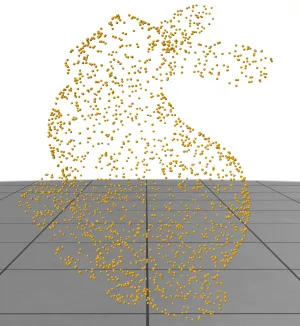

Take a look at the pointcloud below, sampled using random indices. The bunny looks weird and patchy.

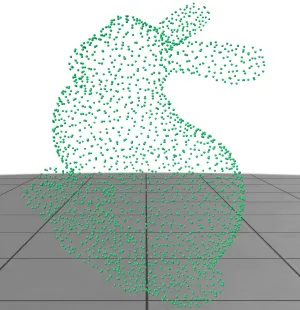

Now, look at the pointcloud downsampled via FPS. Notice how the ears and fine details are preserved much better.

This is the power of Farthest Point Sampling: it ensures we evenly cover the whole set!

Python implementation

Here is a script comparing the two methods using trimesh and fpsample library.

import trimesh

import numpy as np

import polyscope as ps

import fpsample

# Read the model

mesh = trimesh.load("models/SileaneBunny.obj")

# Sample the mesh into a point cloud with 10000 points

points, face_indices = trimesh.sample.sample_surface(mesh, 10000)

# Sort the point cloud along x->y->z axis (lexicographic sort)

sorted_indices = np.lexsort((points[:, 2], points[:, 1], points[:, 0]))

sorted_points = points[sorted_indices]

ps.init()

ps.set_front_dir("neg_y_front")

ps.set_up_dir("neg_z_up")

ps_cloud_full = ps.register_point_cloud("Full Point Cloud (10000)", sorted_points)

ps_cloud_full.set_radius(0.002)

# Use numpy to randomly sample indices to get a subset of 2048 points

random_indices = np.random.choice(len(sorted_points), size=2048, replace=False)

random_sampled = sorted_points[random_indices]

# Visualize the randomly sampled point cloud

ps_cloud_random = ps.register_point_cloud("Random Sampled (2048)", random_sampled)

ps_cloud_random.set_radius(0.003)

ps_cloud_random.set_color((1.0, 0.5, 0.0))

# Use fpsample library to perform Farthest Point Sampling (FPS)

fps_indices = fpsample.bucket_fps_kdline_sampling(sorted_points, 2048, h=5)

fps_sampled = sorted_points[fps_indices]

# Visualize the FPS sampled point cloud

ps_cloud_fps = ps.register_point_cloud("FPS Sampled (2048)", fps_sampled)

ps_cloud_fps.set_radius(0.003)

ps_cloud_fps.set_color((0.0, 0.8, 0.2))

ps.show()Existing implementation

I have found several implementations available in the PyTorch ecosystem if you want to use this in your models: